Winning an election with 22% of the popular vote

A presidential candidate could be elected with as little as 21.8% of the popular vote by getting just over 50% of the votes in DC and in each of 39 small states. This is true even when everyone votes and there are only two candidates. In other words, a candidate could lose with 78.2% of the popular vote by getting just under 50% in the small states and 100% in the large states.
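
With two candidates and full turnout, the winner's share is roughly half of the votes cast in the states the winner carries. Write v_s for the number of voters in state s (counting DC as a state) and W for the set of states the winner carries. Assume, as a simplification, that carrying a state takes floor(v_s / 2) + 1 votes. Then:

```latex
\text{winner's share}
  = \frac{\sum_{s \in W} \left( \lfloor v_s / 2 \rfloor + 1 \right)}{\sum_{s} v_s}
  \approx \frac{1}{2} \cdot \frac{\sum_{s \in W} v_s}{\sum_{s} v_s}
```

A 21.8% share therefore means the states in W cast about 2 × 21.8% = 43.6% of all votes. The loser gets just under half of those votes (about 21.8% of the total) plus all of the remaining 56.4%, which gives the 78.2% figure above.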

The optimal set of states to take (the one that lets a candidate win with the smallest popular vote) is not the N states with the smallest population. It's also not the N states with the smallest value for (population/electors), which would be optimal if you could get exactly 270 electoral votes that way.

The optimal solution happens to get exactly 270 electoral votes. In this solution, the winner takes DC, the 37 smallest states, the 39th smallest state, and the 40th smallest state. (The winner takes Alabama, Alaska, Arizona, Arkansas, Colorado, Connecticut, Delaware, DC, Hawaii, Idaho, Indiana, Iowa, Kansas, Kentucky, Louisiana, Maine, Maryland, Minnesota, Mississippi, Missouri, Montana, Nebraska, Nevada, New Hampshire, New Mexico, North Carolina, North Dakota, Oklahoma, Oregon, Rhode Island, South Carolina, South Dakota, Tennessee, Utah, Vermont, Virginia, Washington, West Virginia, Wisconsin, and Wyoming.)
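
One way to search for such a set is to treat it as a 0/1 knapsack problem. This is not necessarily the algorithm the post refers to. Each state has a "size" (its electoral votes) and a cost (the votes needed to carry it), and the goal is the cheapest set whose electoral votes reach the target. The sketch below assumes that everyone votes, that there are two candidates, and that carrying a state takes floor(population / 2) + 1 votes. The function names and the three-state data are hypothetical and are not census figures. For comparison, it also implements the population-per-elector rule that the post says is not optimal.

```javascript
// Sketch: choose states whose electoral votes total at least `needed` while
// minimizing the popular votes required to carry them (a 0/1 knapsack).
// Assumptions: everyone votes, two candidates, and carrying a state takes
// floor(population / 2) + 1 votes. Electors must be positive integers.
function votesToCarry(state) {
  return Math.floor(state.population / 2) + 1;
}

function cheapestWin(states, needed) {
  const totalEV = states.reduce((sum, s) => sum + s.electors, 0);
  // best[e]: fewest votes that carry states worth exactly e electoral votes
  const best = new Array(totalEV + 1).fill(Infinity);
  // picked[e]: names of the states behind best[e]
  const picked = Array.from({ length: totalEV + 1 }, () => []);
  best[0] = 0;
  for (const s of states) {
    const cost = votesToCarry(s);
    // Walk e downward so each state is counted at most once.
    for (let e = totalEV; e >= s.electors; e--) {
      const candidate = best[e - s.electors] + cost;
      if (candidate < best[e]) {
        best[e] = candidate;
        picked[e] = [...picked[e - s.electors], s.name];
      }
    }
  }
  // Any total of at least `needed` wins; take the cheapest such total.
  let bestE = -1;
  for (let e = needed; e <= totalEV; e++) {
    if (best[e] < Infinity && (bestE === -1 || best[e] < best[bestE])) {
      bestE = e;
    }
  }
  if (bestE === -1) return null; // not enough electoral votes exist
  return { votes: best[bestE], electors: bestE, states: picked[bestE] };
}

// For comparison: take states in order of population per elector until the
// target is reached. This rule is not optimal in general.
function greedyByRatio(states, needed) {
  const order = [...states].sort(
    (a, b) => a.population / a.electors - b.population / b.electors
  );
  let votes = 0;
  let electors = 0;
  const names = [];
  for (const s of order) {
    if (electors >= needed) break;
    votes += votesToCarry(s);
    electors += s.electors;
    names.push(s.name);
  }
  return { votes, electors, states: names };
}

// Hypothetical three-state example (made-up numbers, not census data).
const example = [
  { name: "X", population: 30, electors: 3 },
  { name: "Y", population: 50, electors: 4 },
  { name: "Z", population: 60, electors: 5 },
];
console.log(cheapestWin(example, 7));   // X and Y: 42 votes, 7 electors
console.log(greedyByRatio(example, 7)); // X and Z: 47 votes, 8 electors
```

In this toy example, the ratio rule takes X and then Z. It overshoots to 8 electoral votes and needs 47 votes. The cheapest winning set needs only 42 of the 140 votes (30%). For the real problem, `states` would hold DC and the 50 states, and `needed` would be 270. The table then has at most 539 entries, so the search is quick.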

Read on for my assumptions and algorithm.

Simple JS learning environment

Leonard Lin is teaching animation students basic programming so they'll be able to use Maya's MELScript and Flash's ActionScript. He chose JavaScript as the first language for his students because JavaScript and ActionScript are both variants of ECMAScript.

I made a simple JS learning environment to cut out the save-switch-reload cycle and the "magic" HTML that surrounds a short JS program. If an error occurs, it highlights the line that caused it.
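
The core idea is to run a short program with a print() function in scope and no surrounding HTML. The sketch below shows one way to do that. It is not the environment's actual code, and `runProgram` is a hypothetical name. The real environment also highlights the line where an error occurred, which this sketch does not attempt. It only reports the error's name and message.

```javascript
// Sketch: run `source` with a print() function in scope and collect output.
function runProgram(source) {
  const output = [];
  // print() joins its arguments with spaces, one output line per call.
  const print = (...args) => output.push(args.join(" "));
  try {
    // new Function compiles `source` as a function body taking `print`.
    // A syntax error is thrown here; a runtime error is thrown by the call.
    new Function("print", source)(print);
  } catch (e) {
    output.push(e.name + ": " + e.message);
  }
  return output;
}

console.log(runProgram('for (var i = 1; i <= 3; i++) print("line", i);').join("\n"));
// line 1, line 2, line 3 on separate lines
```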

I reused a lot of code and UI ideas to make it. The overall UI comes from the Real-time HTML editor, the print() function comes from the JavaScript Shell, and the error-selecting idea and code come from the "blogidate XML well-formedness" bookmarklet. If you want to look at the code for the JS env, most of it is in the "buttons" frame.

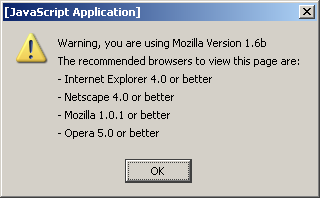

Mozilla 1.6b < Mozilla 1.0.1?

This USPS page uses the expression (browserName == "Mozilla" && browserVersion >= 1.0) to recognize acceptable versions of Mozilla. The string "1.6b" becomes NaN when coerced to a number, and NaN >= 1.0 is false, so Mozilla 1.6b users see the warning. Mozilla 1.5 users don't, because "1.5" coerces to 1.5. Ironically, "1.0.1", the minimum version they claim to support, also coerces to NaN.

If the site had used parseFloat instead of implicit coercion, it wouldn't have hit this problem. parseFloat("1.6b") returns the number 1.6.
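
The short script below reproduces the comparison and the parseFloat fix. `acceptsCoerced` and `acceptsParsed` are hypothetical names. Apart from the expression quoted above, the USPS script's actual code is not shown.

```javascript
// The comparison as written: `>=` coerces the string with ToNumber, so
// "1.6b" and "1.0.1" become NaN, and NaN >= 1.0 is false.
function acceptsCoerced(browserVersion) {
  return browserVersion >= 1.0;
}

// The fix: parseFloat reads the longest numeric prefix,
// so "1.6b" -> 1.6 and "1.0.1" -> 1.
function acceptsParsed(browserVersion) {
  return parseFloat(browserVersion) >= 1.0;
}

for (const v of ["1.5", "1.6b", "1.0.1"]) {
  console.log(v, Number(v), acceptsCoerced(v), parseFloat(v), acceptsParsed(v));
}
// 1.5 1.5 true 1.5 true
// 1.6b NaN false 1.6 true
// 1.0.1 NaN false 1 true
```

parseFloat is enough for this check. It is not a general version comparison, though: "1.10" parses as 1.1, which is smaller than 1.6.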

Google Cache and slow CSS

If you use Google Cache when a server isn't responding, and the page uses an external style sheet, you won't be able to see the cached page. The reason is that most browsers block page display while waiting for the style sheet to load, and Google doesn't cache CSS or images. This limits the usefulness of Google's cache, especially now that CSS is popular.

Google could cache CSS along with HTML. Alternatively, to avoid spidering and storing every page's CSS, Google could proxy CSS loads for Google Cache users and have the proxy time out after 5 seconds. But either solution might use a lot of bandwidth.

Google could add code to its cache pages to make CSS load later or without blocking. The disadvantage is that when the original server is responding, the page would appear unstyled for a split second. Since some Google users use the cache even when the site isn't down, they would see that flash of unstyled content.

I hoped Google could add code to its cache pages that stops a blocking load once it has taken too long. JavaScript can detect a slow load: call setTimeout in a SCRIPT element above the LINK element, and call clearTimeout in another SCRIPT element below the LINK. But the function that setTimeout calls can't cancel the load by disabling the style sheet, changing the LINK's href, or removing the LINK element from the document. Browser makers didn't anticipate JS trying to cancel a blocking load. (Removing the LINK element from the document even crashes IE.)
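
The sketch below covers the detection half. Like the post, it assumes that the browser doesn't run the SCRIPT below the LINK until the style sheet has loaded. The 5000 ms timeout and the names `cssTimer` and `cssIsSlow` are illustrative. In a page, the two parts would sit in separate SCRIPT elements on either side of the LINK.

```javascript
// --- Part 1: SCRIPT element just above the style sheet's LINK ---
var cssIsSlow = false;
var cssTimer = setTimeout(function () {
  // Fires only if the style sheet hasn't loaded within 5 seconds.
  // Detection works, but nothing done here (disabling the sheet, changing
  // the LINK's href, removing the LINK) cancels the blocking load.
  cssIsSlow = true;
}, 5000);

// --- Part 2: SCRIPT element just below the LINK ---
// Assumed to run only after the style sheet has loaded, so clearing the
// timer means the load was fast enough.
clearTimeout(cssTimer);
```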

Another solution is for browsers to make CSS loads block less:

- 84582#c11 - CSS loads should stop blocking layout if they take more than a few seconds

- 220142 - Pressing Stop while waiting for CSS should finish displaying what has been loaded before stopping.

- 224029 - JS can't cancel blocking load of a style sheet

Smaller Google home page

I edited Google's home page to make it as small as I could without changing how it looks. The result is 30% smaller and works slightly better.

Most of the changes that weren't simple deletions involved the code for the tabs above the search box.

Perception experiment videos

The Visual Cognition Lab at the University of Illinois has some cool videos.

In one set of videos, "Real-world person-change events", an experimenter asks a subject for directions. Two people carrying a door come between the experimenter and the subject. While the door is between them, the experimenter switches with one of the door-carriers. Subjects noticed the person change between 35% and ~100% of the time, depending on whether the experimenter was part of the same social group as the subject.

The "Gradual changes to scenes" videos are fun. Over a period of about 10 seconds, part of an image changes. Unfortunately, video compression artifacts make it easy to see the change if you focus on the correct portion of the image. I wrote Gradual image change in JavaScript with the intent of submitting it to the lab. The JavaScript works by superimposing two images and varying the transparency of the top image in increments of 1%. It works well in IE 6.0, but it's about three times too slow in Mozilla Firebird on my 1.6 GHz computer.
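
The sketch below shows the stepping logic: 100 steps of 1% spread over the duration of the change. It is not the code of the Gradual image change page. `setOpacity` is a hypothetical callback that applies an opacity between 0 and 1 to the top image. The choice to fade the top image in, rather than out, is an assumption.

```javascript
// Sketch: fade the top of two superimposed images in 1% steps over durationMs.
function gradualChange(setOpacity, durationMs, onDone) {
  const steps = 100;                   // 1% per step
  const interval = durationMs / steps; // e.g. 10000 ms / 100 = 100 ms per step
  let step = 0;
  setOpacity(0);
  function tick() {
    step += 1;
    setOpacity(step / steps);
    if (step < steps) {
      setTimeout(tick, interval);
    } else if (onDone) {
      onDone();
    }
  }
  setTimeout(tick, interval);
}
```

In a browser, `setOpacity` might be `v => { topImage.style.opacity = v; }`, where `topImage` is hypothetical. IE 6 used `filter: alpha(opacity=…)`, and Mozilla of that era used `-moz-opacity`. Each step makes the browser redraw the blended images. If a redraw takes longer than the interval, the change runs slower than planned.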