Releasing jsfunfuzz and DOMFuzz

Tuesday, July 28th, 2015Today I'm releasing two fuzzers: jsfunfuzz, which tests JavaScript engines, and DOMFuzz, which tests layout and DOM APIs.

Over the last 11 years, these fuzzers have found 6450 Firefox bugs, including 790 bugs that were rated as security-critical.

I had to keep these fuzzers private for a long time because of the frequency with which they found security holes in Firefox. But three things have changed that have tipped the balance toward openness.

First, each area of Firefox has been through many fuzz-fix cycles. So now I'm mostly finding regressions in the Nightly channel, and the severe ones are fixed well before they reach most Firefox users. Second, modern Firefox is much less fragile, thanks to architectural changes to areas that once oozed with fuzz bugs. Third, other security researchers have noticed my success and demonstrated that they can write similarly powerful fuzzers.

My fuzzers are no longer unique in their ability to find security bugs, but they are unusual in their ability to churn out reliable, reduced testcases. Each fuzzer alternates between randomly building a JS string and then evaling it. This construction makes it possible to make a reproduction file from the same generated strings. Furthermore, most DOMFuzz modules are designed so their functions will have the same effect even if other parts of the testcase are removed. As a result, a simple testcase reduction tool can reduce most testcases from 3000 lines to 3-10 lines, and I can usually finish reducing testcases in less than 15 minutes.

The ease of getting reduced testcases lets me afford to report less severe bugs. Occasionally, one of these turns out to be a security bug in disguise. But most importantly, these bug reports help me establish positive relationships with Firefox developers, by frequently saving them time.

A JavaScript engine developer can easily spend a day trying to figure out why a web site doesn't work in Firefox. If instead I can give them a simple testcase that shows an incorrect result with a new JS optimization enabled, they can quickly find the source of the bug and fix it. Similarly, they much prefer reliable assertion testcases over bug reports saying "sometimes, Google Maps crashes after a while".

As a result, instead of being hostile to fuzzing, Firefox developers actively help me fuzz their code. They've added numerous assertions to their code, allowing fuzzers to notice as soon as the smallest thing goes wrong. They've fixed most of the bugs that impede fuzzing progress. And several have suggested new ways to test their code, even (especially) ways that scare them.

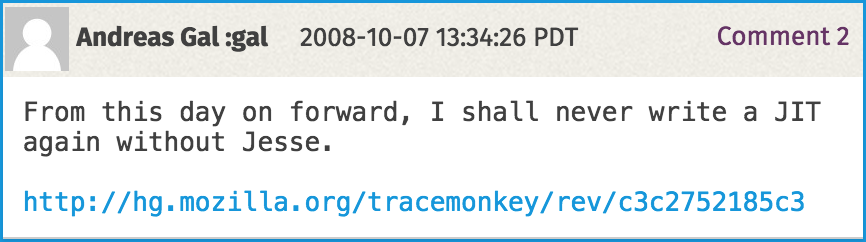

Developers working on the JavaScript engine have been especially helpful. First, they ensured I could test their code directly, apart from the rest of the browser. They already had a JavaScript shell for running regression tests, and they added a --fuzzing-safe option to disable the more dangerous testing functions.

The JS team also created a large set of testing functions to let me control things that would normally be based on heuristics. Fuzzers can now choose when garbage collection happens and even how much. They can make expensive JITs kick in after 2 loop iterations rather than 100. Fuzzers can even simulate out-of-memory conditions. All of these things make it possible to create small, reliable testcases for nasty classes of bugs.

Finally, the JS team has supported differential testing, a form of fuzzing where output is checked for correctness against some oracle. In this case, the oracle is the same JavaScript engine with most of its optimizations disabled. By fixing inconsistencies quickly and supporting --enable-more-deterministic, they've ensured that differential testing doesn't get stuck finding the same problems repeatedly.

Please join us on IRC, or just dive in and contribute! Your suggestions and patches can have a large impact: fuzzer modules often act together to find complex interactions within the browser. For example, bug 893333 was found by my designMode module interacting with a <table> module contributed by a Firefox developer, Mats Palmgren. Likewise, bug 1158427 was found by Christoph Diehl's WebAudio module combined with my reflection-based API-discovery modules.

To the next 6450 browser bug fixes!